Why Cache Storage is 80x Faster Than Disk Storage (HDDs)

OVERVIEW


Latency tests reveal that reading from an in-memory cache is about 80 times faster than reading from an HDD.


In programming and computer science, finding the optimal way to store and retrieve data is a major concern. There are many different types of storage systems, and choosing the best one often depends on your use case.

SCOPE

This post highlights and compares different types of storage and gives recommendations based on use cases, along with the limitations of each.

As the title suggests, reading from an in-memory cache is about 80 times faster than reading from a hard disk drive. But why, you may ask? And how? Please read on.

First off, what is an in-memory cache, and what types of storage exist?

Let's start with some basic definitions of terms used in this article.

- Latency: Latency is simply the time it takes to retrieve data.

- Cache: A cache is an in-memory data store used for fast data access.

- SSDs: Solid State Drives

- HDDs: Hard Disk Drives

Most cloud providers consistently offer a few types of storage service. These include the following:

- Object storage

- File storage

- Block storage

- Caches

Object storage: This type of storage manages data as blobs, or objects. Each object is individually addressable by a URL. Although these objects are files, they are not stored in a conventional file system. Examples of object storage include Google Cloud Storage and Amazon S3. Object storage offers high availability and durability and is often not limited by the size of an available disk. It is also serverless, so you do not need to create VMs or manage available storage space. Object storage typically supports HTTP access to objects, which are organized in buckets with access control managed independently.

However, object storage has high latency, and objects cannot be modified in place: to change even a small piece of an object, you must write the entire object again. This makes object storage unsuitable for database-related operations.
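To make the write limitation concrete, here is a minimal Python sketch. `ToyObjectStore` and `update_one_byte` are hypothetical names that model only the whole-object write behaviour described above; they are not part of any cloud SDK, and real services are accessed over HTTP.

```python
# Hypothetical in-memory stand-in for an object store: each key maps to a
# whole blob. Only the "no in-place update" write semantics are modelled.
class ToyObjectStore:
    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}
        self.bytes_written = 0  # total bytes uploaded, to expose write amplification

    def put(self, key: str, data: bytes) -> None:
        # A put always replaces the entire object.
        self._objects[key] = bytes(data)
        self.bytes_written += len(data)

    def get(self, key: str) -> bytes:
        return self._objects[key]


def update_one_byte(store: ToyObjectStore, key: str, offset: int, value: int) -> None:
    # No partial write is possible: read the object, patch it locally,
    # then upload the whole object again.
    blob = bytearray(store.get(key))
    blob[offset] = value
    store.put(key, bytes(blob))


store = ToyObjectStore()
store.put("report.bin", bytes(1_000_000))   # a 1MB object of zero bytes
update_one_byte(store, "report.bin", 42, 0xFF)
print(store.bytes_written)  # 2000000: changing 1 byte re-uploaded the full 1MB
```

The same cost applies however small the change is, which is why frequent small updates fit block storage or a database better.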

File Storage: File storage provides network-shared file storage to VMs. Google Cloud's file storage service is called Cloud Filestore. File storage suits applications that require operating system–like access to files.

Block Storage: Block storage organizes data into fixed-size units called blocks. On Linux, 4KB is a common file system block size, while relational databases designed to access block storage directly often use 8KB blocks. Block storage also supports operating system and filesystem-level access.
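Because block storage addresses data in fixed-size blocks, a small update only has to rewrite the blocks it overlaps. The Python sketch below shows that arithmetic for the 4KB and 8KB sizes mentioned above; `blocks_touched` is a hypothetical helper for illustration, not an operating system API.

```python
BLOCK_SIZE = 4 * 1024  # 4KB, a common Linux file system block size


def blocks_touched(offset: int, length: int, block_size: int = BLOCK_SIZE) -> range:
    """Return the indices of the fixed-size blocks that a byte range overlaps."""
    if length <= 0:
        return range(0)
    first = offset // block_size                  # block holding the first byte
    last = (offset + length - 1) // block_size    # block holding the last byte
    return range(first, last + 1)


# Updating 1 byte at offset 42 touches a single block.
print(list(blocks_touched(42, 1)))                          # [0]
# A 10-byte write that straddles the 4KB boundary touches two blocks...
print(list(blocks_touched(4090, 10)))                       # [0, 1]
# ...but fits inside one block when the block size is 8KB.
print(list(blocks_touched(4090, 10, block_size=8 * 1024)))  # [0]
```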

Caches: Caches are in-memory data stores that provide fast access to data. In-memory stores are designed for submillisecond latency, which makes them the fastest of the storage types discussed here, faster even than SSDs.

Now, let's look at the results of some latency experiments.

Latency Tests

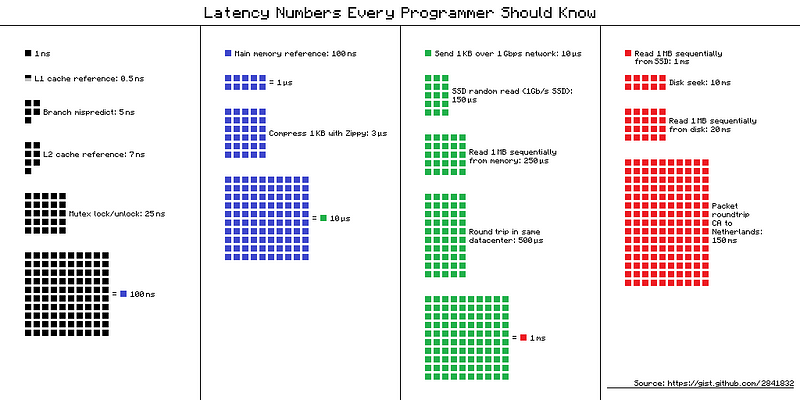

Latency tests across the key data storage types (HDDs, SSDs, and in-memory caches) reveal the following.

Note: 1,000 nanoseconds = 1 microsecond, 1,000 microseconds = 1 millisecond, and 1,000 milliseconds = 1 second.

- Reading 4KB randomly from an SSD takes 150 microseconds

- Reading 1MB sequentially from memory (where an in-memory cache keeps its data) takes 250 microseconds

- Reading 1MB sequentially from an SSD takes 1,000 microseconds or 1 millisecond

- Reading 1MB sequentially from disk (HDDs) takes 20,000 microseconds or 20 milliseconds

Thus, reading 1MB from disk takes 80 times longer than reading it from an in-memory cache!

It's basic math. HDDs take about 20 milliseconds to read 1MB, which makes them twenty (20) times slower than SSDs, which take only 1 millisecond to read the same amount of data. An in-memory cache, in turn, is four (4) times faster than an SSD, taking about 250 microseconds to read the same data sequentially. Combining the two ratios, 20 × 4 = 80.
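The ratios in this paragraph can be checked with a few lines of Python. The sketch stores the 1MB sequential-read figures listed above in nanoseconds, using the unit conversions from the note, and divides each one by the memory figure; the dictionary and its labels are just illustrative names.

```python
# 1MB sequential-read latencies from the list above, stored in nanoseconds.
NS_PER_US = 1_000                # 1,000 nanoseconds = 1 microsecond
NS_PER_MS = 1_000 * NS_PER_US    # 1,000 microseconds = 1 millisecond

read_1mb_ns = {
    "memory (in-memory cache)": 250 * NS_PER_US,
    "SSD": 1 * NS_PER_MS,
    "HDD": 20 * NS_PER_MS,
}

baseline = read_1mb_ns["memory (in-memory cache)"]
ratios = {medium: ns / baseline for medium, ns in read_1mb_ns.items()}

for medium, ns in read_1mb_ns.items():
    print(f"{medium}: {ns / NS_PER_US:,.0f} us ({ratios[medium]:.0f}x memory)")

# HDD vs SSD (20x) times SSD vs memory (4x) gives HDD vs memory (80x).
print(read_1mb_ns["HDD"] / read_1mb_ns["SSD"] * ratios["SSD"])  # 80.0
```

Running it prints 250 us, 1,000 us, and 20,000 us, with the HDD line at 80x memory.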

More information on the latency figures above can be found in Jonas Bonér's “Latency Numbers Every Programmer Should Know”.

Let’s work through the above example of reading 1MB of data. If you have the data stored in an in-memory cache, you can retrieve the data in 250 microseconds or 0.25 milliseconds. If that same data is stored on an SSD, it will take four times as long to retrieve it at 1 millisecond. If you are retrieving the same data from a hard disk drive, you can expect to wait 20 milliseconds or 80 times as long as reading from an in-memory cache.

Caches are very helpful when you need to keep read latency in your application to a minimum. After all, who doesn’t love fast retrieval times? So why don’t we always store our data in caches, you may ask? There are three (3) key reasons.

- Memory is more expensive than SSD or hard disk drive (HDD) storage. In many cases, it is not practical to have as much in-memory storage as persistent block storage on SSDs or HDDs.

- Caches are volatile: you lose the data stored in a cache when power is lost or the operating system is rebooted. Use a cache for fast access only, never as the only store keeping the data. Some form of persistent storage should maintain a “system of truth,” a data store that always has the latest and most accurate version of the data.

- One major issue with caches is that they can get out of synchronization with the system of truth. This can happen if the system of truth is updated but the new data is not written to the cache. When this happens, it can be difficult for an application that depends on the cache to detect the fact that data in the cache is invalid. If you decide to use a cache, be sure to design a cache update strategy that meets your requirements for consistency between the cache and the system of truth.
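One common update strategy is to invalidate the cached entry whenever the system of truth changes. The Python sketch below, which assumes two plain dictionaries as hypothetical stand-ins for a database and an in-memory cache, first reproduces the out-of-sync problem and then shows invalidation fixing it. It is an illustration rather than the only valid strategy; write-through caching, which updates the cache and the database together, is another option.

```python
class Store:
    """Hypothetical pairing of a system of truth (db) and an in-memory cache."""

    def __init__(self) -> None:
        self.db: dict[str, str] = {}     # stands in for the persistent database
        self.cache: dict[str, str] = {}  # stands in for the in-memory cache

    def read(self, key: str) -> str:
        # Serve from the cache when possible; otherwise load from the db and cache it.
        if key in self.cache:
            return self.cache[key]
        value = self.db[key]
        self.cache[key] = value
        return value

    def write_db_only(self, key: str, value: str) -> None:
        # Updates the system of truth but forgets the cache: the bug.
        self.db[key] = value

    def write_and_invalidate(self, key: str, value: str) -> None:
        # Update the system of truth first, then drop the cached copy
        # so the next read reloads the fresh value.
        self.db[key] = value
        self.cache.pop(key, None)


s = Store()
s.db["price"] = "10"
s.read("price")                   # caches "10"
s.write_db_only("price", "12")
print(s.read("price"))            # 10 (stale: cache and database disagree)
s.write_and_invalidate("price", "12")
print(s.read("price"))            # 12
```

With many concurrent readers and writers, invalidation can still leave short windows where a stale value gets cached, so your consistency requirements should drive the choice of strategy.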

This is such a challenging design problem that it has become memorialized. According to Phil Karlton, “There are only two hard things in computer science: cache invalidation and naming things.”

Users expect web applications to be highly responsive. If a page takes more than 2 to 3 seconds to load, the user experience can suffer. When a query is made to a database, the database engine looks up the data, which is usually on disk. The more users query the database, the more queries it has to serve. Databases keep a queue of queries that are waiting because the database is busy with other queries. This increases response latency, since the web application has to wait for the database to return the query results.

One way to reduce latency is to reduce the time needed to read the data. In some cases, it helps to replace hard disk drives with faster SSD drives. However, if the volume of queries is high enough that the queue of queries is long even with SSDs, the next best option is to use an in-memory cache.
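To see why queuing magnifies storage latency, consider a deliberately simplified model, an assumption for illustration only: one worker serves queued queries in first-in, first-out order, and each query reads 1MB sequentially, so its service time equals the read latencies listed earlier. `response_time_ms` is a hypothetical helper; real databases run many queries concurrently.

```python
# Simplified model (an assumption, not how real databases schedule work):
# one worker, FIFO order, and every query reads 1MB sequentially.
READ_1MB_MS = {"HDD": 20.0, "SSD": 1.0, "memory": 0.25}


def response_time_ms(position_in_queue: int, service_ms: float) -> float:
    """Time until the query at this 1-based queue position completes."""
    # It waits for every query ahead of it, then runs for one service time.
    return position_in_queue * service_ms


for medium, service_ms in READ_1MB_MS.items():
    print(f"{medium}: 50th query finishes after {response_time_ms(50, service_ms):g} ms")
```

Under these assumptions the 50th query waits 1000 ms on an HDD, 50 ms on an SSD, and 12.5 ms when served from memory, so faster storage shortens the whole queue, not just one read.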

When query results are fetched, they are stored in the cache. The next time that information is needed, it is fetched from the cache instead of the database. This can reduce latency because data is fetched from memory, which is faster than disk. It also reduces the number of queries to the database, so queries that can’t be answered by looking up data in the cache won’t have to wait as long in the query queue before being processed.
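The read path described here is often called the cache-aside pattern. Below is a minimal Python sketch in which `CachedQueryRunner` and `fake_database` are hypothetical names and the query text itself serves as the cache key. After 100 identical requests, only the first one reaches the database.

```python
from typing import Any, Callable


class CachedQueryRunner:
    """Cache-aside sketch: answer from the cache when possible, else ask the database."""

    def __init__(self, run_query: Callable[[str], Any]) -> None:
        self._run_query = run_query          # function that actually queries the database
        self._cache: dict[str, Any] = {}     # query text -> cached result (kept in memory)
        self.db_queries = 0                  # how many queries reached the database

    def fetch(self, query: str) -> Any:
        if query in self._cache:             # cache hit: no database round trip
            return self._cache[query]
        self.db_queries += 1                 # cache miss: query the database
        result = self._run_query(query)
        self._cache[query] = result          # keep the result for the next request
        return result


def fake_database(query: str) -> list[tuple[str]]:
    # Hypothetical stand-in for a real database call that would read from disk.
    return [("alice",)]


runner = CachedQueryRunner(fake_database)
for _ in range(100):
    rows = runner.fetch("SELECT name FROM users WHERE id = 1")
print(rows, runner.db_queries)  # [('alice',)] 1
```

Production systems usually replace the dictionary with a dedicated in-memory store such as Redis or Memcached and give entries an expiry time, and they still need an invalidation strategy so cached results do not drift from the system of truth.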

Hopefully, this comparison brings clarity and speeds up your thought process the next time you need to decide on the type of storage for your applications.

Please leave any questions below in the comments and I will be happy to respond to them.

Cheers!