Always ask ‘What If?’

The SRE mindset of continuous learning

In 1968, around the time of Apollo 7, Margaret Hamilton was working on the flight software for the Apollo Guidance Computer (AGC), the system that would take astronauts to the moon. On some nights and weekends Margaret would take her daughter to work, where her daughter would watch her test the software.

Margaret’s daughter, Lauren, came to work with her one day while the team was running mission scenarios on the hybrid simulation computer. Young and inquisitive, Lauren went exploring and playing astronaut, and eventually caused a simulated mission to crash. She had selected the prelaunch program, P01, while in flight. That action wiped out the flight navigation data, making it impossible for the AGC to pilot the craft back to Earth.

This showed Margaret what would happen if the prelaunch program P01 were inadvertently selected by a real astronaut during a real mission, and she thought to herself, “What if an astronaut did in flight what my daughter just did?”

Margaret, following her SRE instincts, raised this with NASA management and submitted a program change request to add error-checking code to the onboard flight software, in case an astronaut accidentally selected P01 during flight. She was told that it would never happen: the astronauts were well trained and did not make mistakes. So, instead of adding error-checking code, Margaret updated the mission specifications documentation to say the equivalent of “Do not select P01 during flight”. Even this change was deemed unnecessary by many on the project, who believed that astronauts were trained to be perfect.

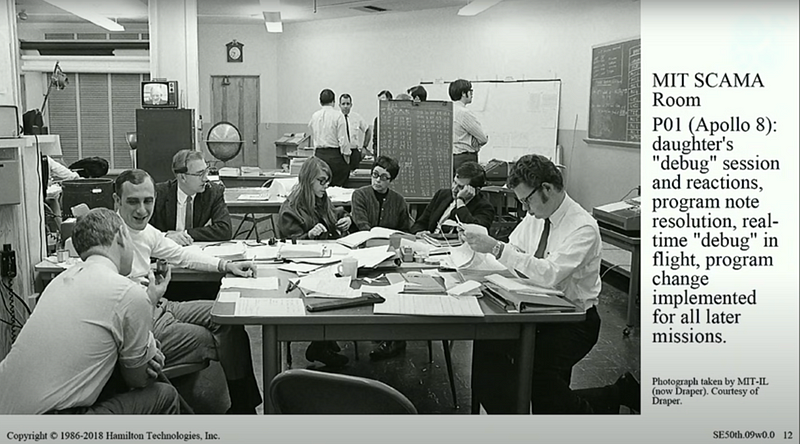

Well, Margaret’s suggested safeguard was considered unnecessary only until the very next mission. On Apollo 8, days after the specifications update, during mid-course on the fourth day of flight, with astronauts Jim Lovell, William Anders, and Frank Borman on board, Jim Lovell selected P01 by mistake. It happened, as it turns out, on Christmas Day, and it created havoc for everyone involved. This was a critical problem: without a workaround, no navigation data meant the astronauts were never coming home. Recovery took hours of effort, but thankfully the documentation update had explicitly called out this possibility, and it proved invaluable in figuring out how to upload usable data and recover the mission with little time to spare.

The change request to add error detection and recovery to the prelaunch program P01 was approved shortly afterwards and added to all later flights.
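To make the idea of that safeguard concrete, here is a minimal Python sketch of a guard that refuses a prelaunch-only program while in flight. It is purely illustrative and is not how the AGC software was written: the `Phase` enum, the `ALLOWED_PHASES` table, the `GuidanceComputer` class, and the `UnsafeProgramError` exception are all hypothetical names invented for this example.

```python
from enum import Enum, auto


class Phase(Enum):
    PRELAUNCH = auto()
    IN_FLIGHT = auto()


class UnsafeProgramError(Exception):
    """Raised when a program is selected in a phase where it would do harm."""


# Hypothetical table: the mission phases in which each program may run.
ALLOWED_PHASES = {
    "P01": {Phase.PRELAUNCH},
}


class GuidanceComputer:
    """Toy model of a computer that holds navigation data (an assumption)."""

    def __init__(self, phase, navigation_data):
        self.phase = phase
        self.navigation_data = navigation_data

    def select_program(self, name):
        allowed = ALLOWED_PHASES.get(name)
        if allowed is None:
            raise KeyError(f"unknown program: {name}")
        if self.phase not in allowed:
            # Refuse before touching any state, so the navigation data survives.
            raise UnsafeProgramError(
                f"{name} is not allowed during {self.phase.name}"
            )
        if name == "P01":
            # In this toy model, prelaunch initialization resets navigation data.
            self.navigation_data = {}
```

The design choice worth noting is that the check runs before any state changes, so a mistaken selection in flight is rejected and the navigation data is left intact, rather than relying on the operator to have read “Do not select P01 during flight”.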

According to Margaret, “A thorough understanding of how to operate a system is not enough to prevent human errors”.

Margaret and her team were solving problems that had never been solved before, and it must have been very challenging. But only by asking “What if?” can we uncover the questions and solutions vital to our continued success in building reliable software systems. The Google SRE team considers Margaret Hamilton to have shown many of the traits of the first SRE: always learning from everyone and everything.

Thoroughness, dedication, a belief in the value of preparation and documentation, and an awareness of what could go wrong, coupled with a strong desire to prevent it: that is the SRE mindset.

Till next time.

Stay safe!

References

- Site Reliability Engineering: How Google Runs Production Systems (Beyer, Jones, Petoff & Murphy, eds.)

- International Conference on Software Engineering (ICSE), 2018.